Robot Operating System (ROS)

What is a Semantic Map?

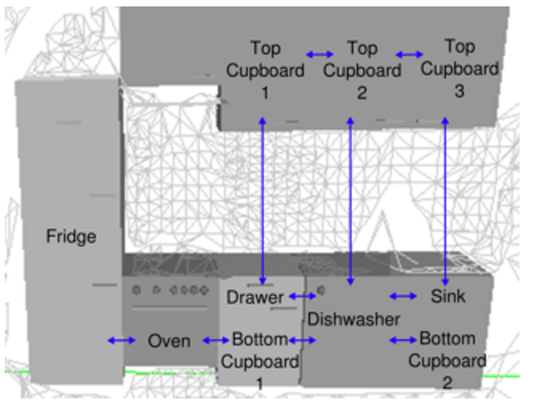

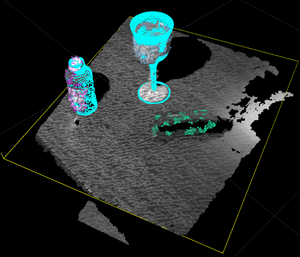

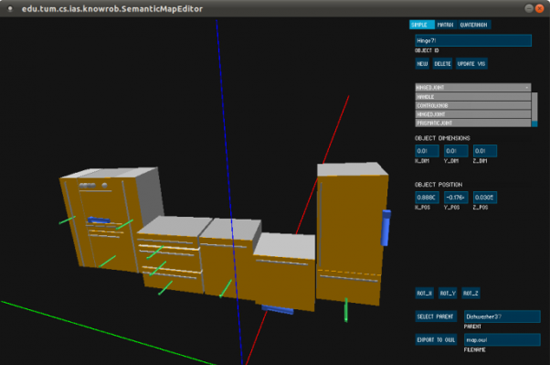

A semantic map is a format for storing a robot's knowledge. It associates knowledge about objects and scenes with locations in the map, thereby establishing the relationship between the environment and the objects in it (fig 1.1, fig 1.2). A semantic map also supports more advanced tasks. Modules that use it directly include Navigation, Collision Avoidance, Localization and Mapping, Path Planning, and Human-Robot Interaction.

(fig 1.1)

(fig 1.2)

Why do we need a Semantic Map?

The main difference between service robots and industrial robots is their environment. Service robots usually face complicated, dynamic environments, whereas industrial robots mostly work in well-controlled, stable ones. Service robots therefore need a far deeper perception of their surroundings than industrial robots do. They rely on high-quality cognition to execute commands correctly and precisely in a dynamic environment, in tasks such as Motion Planning, Localization, Obstacle Avoidance, and Arm Navigation.

Behind every human movement lies knowledge. If we want a robot to act like a human and provide human-oriented services, it should perceive the world in a way similar to human beings. The semantic map is the model closest to the way people perceive the world.

Imagine that we want an intelligent robot to clean our laboratory. To do the job well, the robot first needs at least a map of the lab. Second, it needs to know where the cleaning tools are on that map. These two minimal pieces of knowledge together form a basic semantic map, that is, information and knowledge stored in the form of a map.
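To make the cleaning example concrete, here is a minimal Python sketch of such a basic semantic map. It combines an occupancy grid (the map of the lab) with a list of labelled objects at map coordinates (where the cleaning tools are). The class names, the 0/1 grid encoding, and the query methods are hypothetical and chosen only for illustration. They are not a ROS API.

```python
import math
from dataclasses import dataclass, field


@dataclass
class SemanticObject:
    label: str   # semantic class of the object, e.g. "broom"
    x: float     # position in the map frame, in metres
    y: float


@dataclass
class SemanticMap:
    grid: list          # occupancy grid rows: 0 = free, 1 = occupied (assumed encoding)
    resolution: float   # metres per grid cell
    objects: list = field(default_factory=list)

    def add_object(self, obj):
        self.objects.append(obj)

    def find(self, label):
        """All known objects with the given semantic label."""
        return [o for o in self.objects if o.label == label]

    def nearest(self, label, x, y):
        """Closest object with this label to (x, y), or None if none is known."""
        candidates = self.find(label)
        if not candidates:
            return None
        return min(candidates, key=lambda o: math.hypot(o.x - x, o.y - y))

    def is_free(self, x, y):
        """Look up the occupancy of the cell containing map point (x, y)."""
        return self.grid[int(y / self.resolution)][int(x / self.resolution)] == 0


# A 3 x 3 m lab with one occupied cell (a desk) in the middle.
lab = SemanticMap(grid=[[0, 0, 0], [0, 1, 0], [0, 0, 0]], resolution=1.0)
lab.add_object(SemanticObject("broom", 0.5, 2.5))
lab.add_object(SemanticObject("broom", 2.5, 0.5))
lab.add_object(SemanticObject("mop", 2.5, 2.5))

tool = lab.nearest("broom", 2.0, 1.0)
print(tool.label, tool.x, tool.y)  # broom 2.5 0.5
```

The point of the structure is that one query can combine geometry (is a cell free?) with semantics (which object is a broom, and where is it?).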

Platforms to implement a Semantic Map

ROS (Robot Operating System)

ROS is an open-source robot development platform on which all the main research areas, such as Navigation, Manipulation, Perception, and Cognition, can be integrated. In addition, researchers around the world can share research resources, algorithms, and results through the platform.

Suppose we want to build a service robot ourselves. We do not need to write the robot's controllers and drivers on our own, or even build a 3D physics simulation environment! ROS provides a stable platform for simulation and testing, along with research resources from top universities around the world. We can therefore focus on fixing shortcomings and on developing the techniques where we have an advantage.

ROS currently supports several programming languages. Those verified to be stable are C++ and Python. The experimental ones include Lisp, Octave, and Java. Each has its own client library but has not yet been verified as stable.

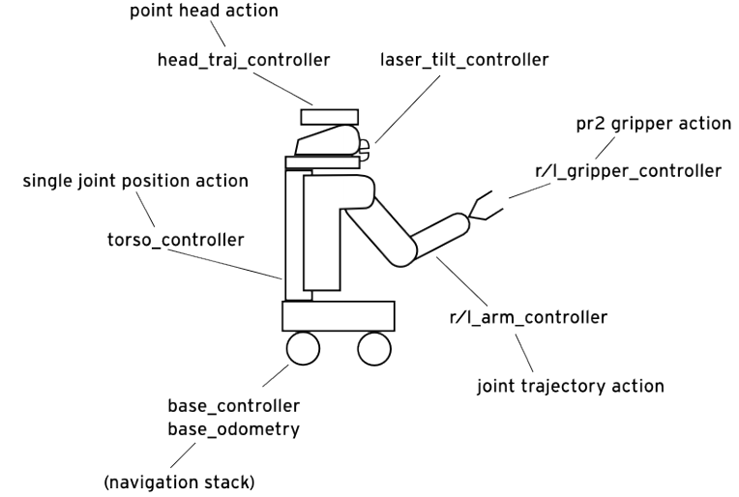

PR2 (Personal Robot 2)

PR2 (fig 3.1) is a robot designed by the US company Willow Garage. It provides a stable hardware platform on which we can test our algorithms. We do not have to deal with the miscellaneous problems of building a hardware architecture (fig 3.2). Instead, we can focus on developing high-level software and applications.

(fig 3.1) (fig 3.2) PR2 hardware architecture

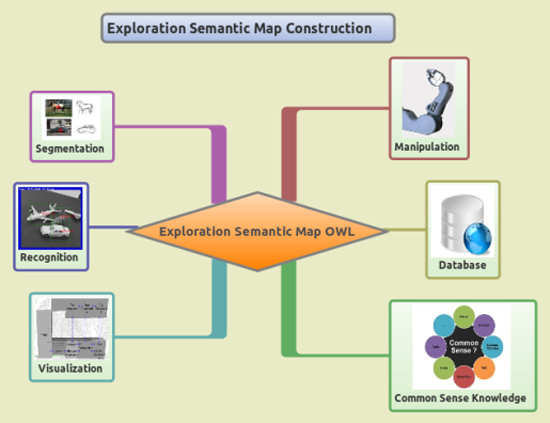

Techniques

The robot needs several basic functionalities to interact with the environment (fig 4.1):

- Perception, to perceive the world.
- Navigation, to move in the world.
- Manipulation, to interact with the world.
- Database, to store knowledge.
- Planning, to use the knowledge and the other functionalities to complete a task.

These basic functionalities are also the modules we need to build the semantic map.

(fig 4.1) System of a mobile robot

(fig 4.2) Semantic modules

Perception

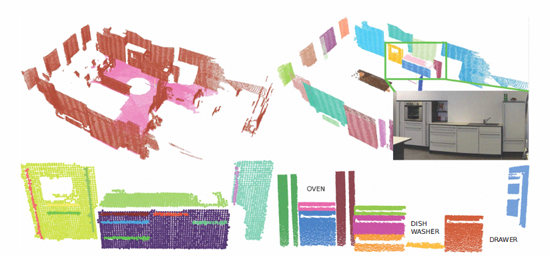

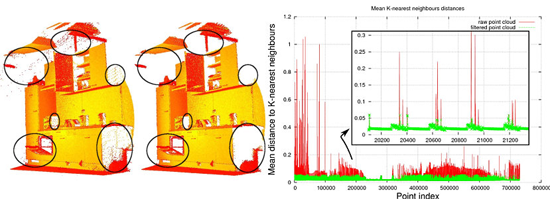

In recent years 3D sensors have become popular, giving us additional depth information about the environment. The main tool for processing 3D data is the Point Cloud Library (PCL) (fig 4.3). PCL represents 3D data with the PointCloud data structure, which is more convenient for processing than an ordinary mesh format.

(fig 4.3)

We use PCL for simple processing steps such as segmentation (fig 4.4) and down sampling (fig 4.5).

PCL uses the RANSAC algorithm to find the points that match a model (for example, a plane) and segments them out. The points are then extracted and clustered, and the resulting clusters are the parts we want.
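PCL implements this step in C++ (for example with `pcl::SACSegmentation`). The following stdlib-only Python sketch shows the idea instead. It repeatedly fits a plane through three random points, keeps the plane with the most inliers, and separates out the off-plane points, which would then go on to clustering. The function names, the distance threshold, and the iteration count are assumptions, not PCL's API or defaults.

```python
import random


def plane_from_points(p1, p2, p3):
    """Unit-normal plane (a, b, c, d) with ax + by + cz + d = 0, or None if collinear."""
    ux, uy, uz = (p2[i] - p1[i] for i in range(3))
    vx, vy, vz = (p3[i] - p1[i] for i in range(3))
    # Normal vector = (p2 - p1) x (p3 - p1)
    a, b, c = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    norm = (a * a + b * b + c * c) ** 0.5
    if norm == 0:
        return None
    a, b, c = a / norm, b / norm, c / norm
    return a, b, c, -(a * p1[0] + b * p1[1] + c * p1[2])


def ransac_plane(points, threshold=0.01, iterations=200, seed=0):
    """Return the best plane and the indices of its inliers."""
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue  # degenerate (collinear) sample
        a, b, c, d = plane
        inliers = [i for i, (x, y, z) in enumerate(points)
                   if abs(a * x + b * y + c * z + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


# A flat 10 x 10 "table" at z = 0 plus a thin vertical "bottle" standing on it.
table = [(x * 0.1, y * 0.1, 0.0) for x in range(10) for y in range(10)]
bottle = [(0.45, 0.45, 0.05 * k) for k in range(1, 5)]
cloud = table + bottle

plane, inliers = ransac_plane(cloud)
inlier_set = set(inliers)
objects = [p for i, p in enumerate(cloud) if i not in inlier_set]
print(len(inliers), len(objects))  # 100 4
```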

The underlying idea of down sampling is to divide the point cloud into cubes (voxels). All the points inside each cube are then replaced by their centroid, which serves as their representative.

(fig 4.4) Segmentation (fig 4.5) Down Sampling
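The voxel-grid down sampling described above (available in PCL as the `pcl::VoxelGrid` filter) can be sketched in a few lines of Python. Each point is bucketed by its integer cube index, and every bucket is replaced with the centroid of its points. The function name and the sample leaf size are illustrative assumptions.

```python
import math
from collections import defaultdict


def voxel_downsample(points, leaf_size):
    """Replace all points that fall in the same cube (voxel) by their centroid."""
    voxels = defaultdict(list)
    for p in points:
        # Integer voxel index along each axis.
        key = tuple(math.floor(coord / leaf_size) for coord in p)
        voxels[key].append(p)
    centroids = []
    for key in sorted(voxels):
        pts = voxels[key]
        centroids.append(tuple(sum(p[i] for p in pts) / len(pts) for i in range(3)))
    return centroids


cloud = [(0.01, 0.02, 0.0), (0.03, 0.04, 0.0), (0.12, 0.0, 0.0)]
reduced = voxel_downsample(cloud, leaf_size=0.1)
print(len(cloud), "->", len(reduced))  # 3 -> 2
```

A larger `leaf_size` removes more points but also more detail. The choice trades processing speed against the fidelity of later steps such as segmentation.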

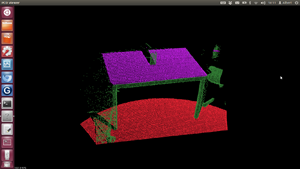

After retrieving the sensor data, we start the object recognition process. The module we use is the Tabletop Object Detector, which consists of two parts: Tabletop Object Segmentation and Tabletop Object Recognition. This module is designed for scenes that contain symmetric objects on a table.

We run the Tabletop Object Detector module as follows. First, Tabletop Object Segmentation segments out the largest plane in the environment, the table, and then clusters the remaining points. These clusters are then sent to Tabletop Object Recognition for recognition.
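The clustering step that follows plane removal can be illustrated with Euclidean clustering. Points are grouped whenever a chain of neighbours closer than a tolerance connects them. PCL offers this as `pcl::EuclideanClusterExtraction`, using a k-d tree for neighbour search. The stdlib Python sketch below uses a brute-force O(n²) neighbour search instead. The function name and the tolerance value are assumptions for illustration.

```python
import math
from collections import deque


def euclidean_clusters(points, tolerance, min_size=1):
    """Group points connected by chains of neighbours closer than `tolerance`."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue, cluster = deque([seed]), [seed]
        while queue:  # breadth-first flood fill from the seed point
            i = queue.popleft()
            neighbours = [j for j in unvisited
                          if math.dist(points[i], points[j]) <= tolerance]
            for j in neighbours:
                unvisited.remove(j)
                queue.append(j)
                cluster.append(j)
        if len(cluster) >= min_size:
            clusters.append(sorted(cluster))
    return sorted(clusters)


# Points left over after the table plane has been removed: two separate objects.
above_table = [(0.00, 0.00, 0.05), (0.01, 0.00, 0.10),
               (0.50, 0.50, 0.05), (0.51, 0.50, 0.08)]
print(euclidean_clusters(above_table, tolerance=0.06))  # [[0, 1], [2, 3]]
```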

The Tabletop Object Recognition module reconstructs the clusters under the assumption that the objects are symmetric. It then compares the features of the clusters with the models in the database. Finally, it returns a list of candidate models that each cluster might be, along with the confidence of each guess.
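The output format described above, a ranked list of candidate models with a confidence per guess, can be illustrated with a deliberately simplified matcher. This is not the Tabletop Object Recognition algorithm. It is a hypothetical stand-in that reduces each cluster and each database model to two features, height and mean radius about a vertical symmetry axis. It converts the feature distance to a score with `exp(-d / scale)` and normalises the scores into confidences. The model database, feature choice, and `scale` are all assumptions.

```python
import math

# Hypothetical model database: name -> (height, radius) in metres of a
# rotationally symmetric object. Real databases store full meshes.
MODEL_DB = {
    "bottle": (0.25, 0.035),
    "wineglass": (0.18, 0.040),
    "mug": (0.10, 0.045),
}


def cluster_features(points):
    """Height and mean horizontal radius, assuming a vertical symmetry axis."""
    n = len(points)
    cx = sum(p[0] for p in points) / n
    cy = sum(p[1] for p in points) / n
    radius = sum(math.hypot(p[0] - cx, p[1] - cy) for p in points) / n
    height = max(p[2] for p in points) - min(p[2] for p in points)
    return height, radius


def rank_candidates(points, db=MODEL_DB, scale=0.02):
    """Return (model, confidence) pairs, best first; confidences sum to 1."""
    h, r = cluster_features(points)
    # Turn feature distance into a score, then normalise the scores.
    scores = {name: math.exp(-math.hypot(h - mh, r - mr) / scale)
              for name, (mh, mr) in db.items()}
    total = sum(scores.values())
    return sorted(((name, s / total) for name, s in scores.items()),
                  key=lambda item: item[1], reverse=True)


# Synthetic cluster: two rings of radius 3.5 cm at the bottom and top of a 25 cm object.
ring = [(0.035 * math.cos(k * math.pi / 4), 0.035 * math.sin(k * math.pi / 4), z)
        for k in range(8) for z in (0.0, 0.25)]
ranked = rank_candidates(ring)
for name, confidence in ranked:
    print(f"{name}: {confidence:.2f}")
```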

We use Rviz, the visualizer provided by ROS, to display the result (fig 4.6). The yellow square is the largest plane found in the environment, that is, the table. The bottle and the wineglass on the plane are both recognized. The light-blue parts are the meshes of the models in the database. When these meshes are overlaid on the 3D data, we can see that the recognition results are quite satisfactory.

(fig 4.6)

Semantic Map Editor

Our semantic map is stored in the OWL text format. The Semantic Map Editor is a tool that helps us edit and visualize the semantic map. Fig 4.7 shows the result of loading an off-the-shelf semantic map OWL file. Several columns on the left side show Object Dimension, Object Position, Object ID, and so on. We can also insert objects or edit the semantic map manually with the editor.

(fig 4.7) Semantic Map Editor
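OWL ontologies can be serialized as RDF/XML, so a semantic map file can be read with an ordinary XML parser. The sketch below uses Python's `xml.etree.ElementTree` to pull object IDs, types, and positions out of a tiny OWL document. The `rdf` and `owl` namespaces are the standard W3C ones. The `map:` vocabulary (`objectType`, `positionX`, `positionY`) and the `example.org` namespace are hypothetical. A real semantic map file uses its own ontology, and a full OWL toolkit would be more robust than raw XML parsing.

```python
import xml.etree.ElementTree as ET

OWL_TEXT = """<?xml version="1.0"?>
<rdf:RDF xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
         xmlns:owl="http://www.w3.org/2002/07/owl#"
         xmlns:map="http://example.org/semantic_map#">
  <owl:NamedIndividual rdf:about="http://example.org/semantic_map#Broom_1">
    <map:objectType>Broom</map:objectType>
    <map:positionX>1.20</map:positionX>
    <map:positionY>3.40</map:positionY>
  </owl:NamedIndividual>
  <owl:NamedIndividual rdf:about="http://example.org/semantic_map#Table_1">
    <map:objectType>Table</map:objectType>
    <map:positionX>4.00</map:positionX>
    <map:positionY>2.50</map:positionY>
  </owl:NamedIndividual>
</rdf:RDF>
"""

NS = {
    "rdf": "http://www.w3.org/1999/02/22-rdf-syntax-ns#",
    "owl": "http://www.w3.org/2002/07/owl#",
    "map": "http://example.org/semantic_map#",  # hypothetical vocabulary
}
RDF_ABOUT = "{%s}about" % NS["rdf"]


def load_objects(owl_text):
    """Map each individual's local ID to its type and 2-D position."""
    root = ET.fromstring(owl_text)
    objects = {}
    for ind in root.findall("owl:NamedIndividual", NS):
        obj_id = ind.get(RDF_ABOUT).split("#")[-1]
        objects[obj_id] = {
            "type": ind.findtext("map:objectType", namespaces=NS),
            "x": float(ind.findtext("map:positionX", namespaces=NS)),
            "y": float(ind.findtext("map:positionY", namespaces=NS)),
        }
    return objects


objects = load_objects(OWL_TEXT)
print(objects["Broom_1"])  # {'type': 'Broom', 'x': 1.2, 'y': 3.4}
```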

Navigation

Gmapping

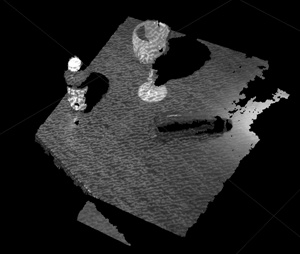

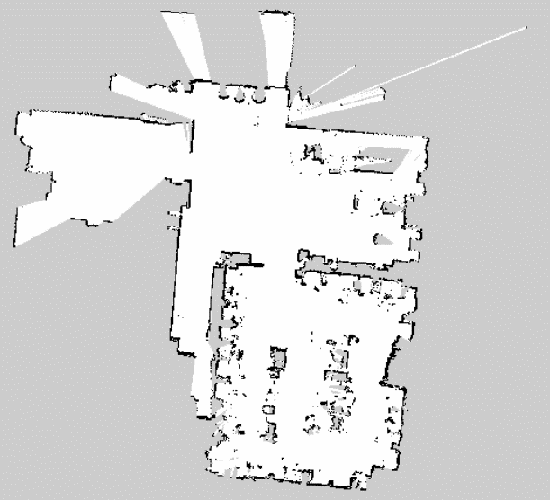

By scanning the environment with the base laser, the robot can detect where the obstacles are and where it can pass. The Gmapping module takes in the base laser data. It uses a Rao-Blackwellized particle filter and the Extended Kalman Filter (EKF) algorithm to localize the robot in the environment while simultaneously building the map.
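In Gmapping's Rao-Blackwellized particle filter, each particle carries both a pose hypothesis and its own map. The core particle-filter loop has three steps: predict with odometry, weight each particle by how well the laser reading fits, and resample. This loop can be shown on a much simpler problem, localizing in a known 1-D corridor. The corridor, the door positions, and the noise values below are hypothetical. The sketch also omits the per-particle mapping that Gmapping adds.

```python
import math
import random

# Hypothetical 1-D corridor: door positions in metres.
DOORS = [2.0, 5.0, 8.0]


def expected_range(x):
    """Distance from x to the nearest door (a stand-in for a laser reading)."""
    return min(abs(x - d) for d in DOORS)


def gaussian(err, sigma):
    return math.exp(-0.5 * (err / sigma) ** 2)


def particle_filter_step(particles, control, measurement, rng,
                         motion_noise=0.1, sensor_sigma=0.2):
    # 1. Predict: move every particle by the odometry reading plus noise.
    moved = [p + control + rng.gauss(0.0, motion_noise) for p in particles]
    # 2. Weight: how well does each particle explain the measurement?
    weights = [gaussian(measurement - expected_range(p), sensor_sigma) for p in moved]
    if sum(weights) == 0:
        return moved  # degenerate case: keep the predicted particles
    # 3. Resample: draw particles in proportion to their weights.
    return rng.choices(moved, weights=weights, k=len(moved))


rng = random.Random(42)
particles = [rng.uniform(0.0, 10.0) for _ in range(1000)]  # no idea where we start
true_x = 0.0
for _ in range(8):
    true_x += 1.0
    particles = particle_filter_step(particles, 1.0, expected_range(true_x), rng)

estimate = sum(particles) / len(particles)
print(f"true={true_x:.1f} estimate={estimate:.2f}")
```

After the first step, several positions still explain the readings equally well. The spurious hypotheses die out only as the robot keeps moving and their predicted readings diverge from the real ones.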

We construct the map of the laboratory by tele-operating the robot around it. While moving, the robot performs mapping and localization simultaneously. After we have driven the robot around the lab, fig 4.9 shows that the lab's edges, walls, and obstacles have been constructed and marked with black lines in the map. The white area is where the robot can pass freely.

(fig 4.9) The map constructed by Gmapping
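The black and white cells in a map like fig 4.9 come from occupancy-grid mapping. One common formulation stores a log-odds value per cell. Cells that a laser beam passes through become more likely free, and the cell where the beam ends becomes more likely occupied. The sketch below shows this update with Bresenham ray tracing. The 0.7/0.3 sensor-model probabilities and the text rendering are assumptions for illustration. Gmapping's internal bookkeeping may differ.

```python
import math

L_OCC = math.log(0.7 / 0.3)   # log-odds added at a beam endpoint (assumed sensor model)
L_FREE = math.log(0.3 / 0.7)  # log-odds added to cells the beam passed through


def bresenham(x0, y0, x1, y1):
    """Grid cells on the line from (x0, y0) to (x1, y1), inclusive."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def integrate_beam(log_odds, robot, hit):
    """Mark cells before the hit as more likely free, the hit cell as more likely occupied."""
    ray = bresenham(*robot, *hit)
    for x, y in ray[:-1]:
        log_odds[y][x] += L_FREE
    hx, hy = ray[-1]
    log_odds[hy][hx] += L_OCC


def to_image(log_odds):
    """'#' = occupied (black), '.' = free (white), '?' = never observed."""
    return ["".join("#" if v > 0 else "." if v < 0 else "?" for v in row)
            for row in log_odds]


grid = [[0.0] * 5 for _ in range(5)]
robot = (0, 2)
for hit in [(4, 2), (2, 0), (3, 4)]:
    integrate_beam(grid, robot, hit)
print("\n".join(to_image(grid)))
```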

SLAM+Navigation

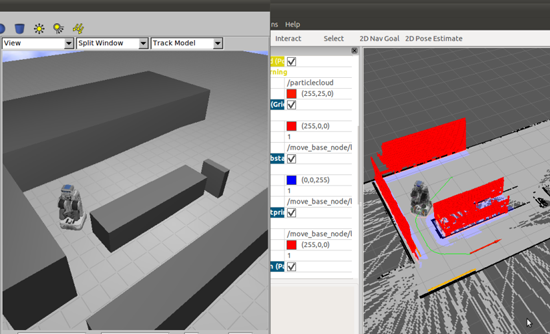

The robot can build a grid map and localize itself while navigating through the environment. This is accomplished with the pr2_2dnav_slam package.

The pr2_2dnav_slam package uses several techniques. To build a map and localize the robot, it adopts the adaptive (KLD-sampling) Monte Carlo localization approach and the EKF. Once the map is built, pr2_2dnav_slam lets the robot navigate through a dynamic environment using two path planners: a global planner and a local planner. The global planner first computes the cost map and then finds the shortest path with Dijkstra's algorithm. The local planner uses the Dynamic Window Approach.
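The global planner's core idea, a shortest path over a cost map found with Dijkstra's algorithm, can be sketched on a small 4-connected grid. In this sketch, entering a cell costs 1 plus its cost-map value. Cells at or above a hypothetical `lethal` threshold are treated as obstacles. The function name, cost model, and threshold are assumptions, not the ROS global planner's actual implementation.

```python
import heapq


def dijkstra_grid(costmap, start, goal, lethal=255):
    """Cheapest 4-connected path from start to goal as a list of (row, col), or None."""
    rows, cols = len(costmap), len(costmap[0])
    dist = {start: 0}
    parent = {start: None}
    heap = [(0, start)]
    while heap:
        d, (r, c) = heapq.heappop(heap)
        if (r, c) == goal:
            break
        if d > dist[(r, c)]:
            continue  # stale queue entry
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and costmap[nr][nc] < lethal:
                nd = d + 1 + costmap[nr][nc]  # step cost plus cost-map penalty
                if nd < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = nd
                    parent[(nr, nc)] = (r, c)
                    heapq.heappush(heap, (nd, (nr, nc)))
    if goal not in parent:
        return None
    path, node = [], goal
    while node is not None:  # walk back from the goal to the start
        path.append(node)
        node = parent[node]
    return path[::-1]


L = 255  # obstacle cell
costmap = [
    [0, 50, 50, 0],  # high cost: e.g. cells close to an obstacle
    [0, L, L, 0],
    [0, 0, 0, 0],
]
print(dijkstra_grid(costmap, (0, 0), (2, 3)))
```

Because the top row carries a high cost, the cheapest path goes around the bottom of the obstacle even though both routes have the same number of cells.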

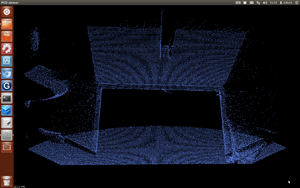

We can currently build a virtual environment containing a robot in Gazebo, a 3D simulator with strong simulation of physical properties. We then set a destination in Rviz, a visualizer that shows the map as seen by the robot, and the robot moves to that destination. While moving, the robot simultaneously builds a grid map and generates a collision-free path around obstacles (fig 4.10).

(fig 4.10) SLAM+Navigation
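The local planner's Dynamic Window Approach, mentioned for pr2_2dnav_slam, can be sketched in four steps:

1. Sample only the linear and angular velocities reachable within one control period (the dynamic window).
2. Forward-simulate each velocity pair for a short horizon.
3. Discard trajectories that come too close to an obstacle.
4. Pick the best-scoring remaining trajectory.

The acceleration limits, horizon, robot radius, and scoring weights below are hypothetical. ROS's own local planner uses its own cost functions.

```python
import math


def simulate(x, y, theta, v, w, dt, steps):
    """Forward-simulate a unicycle robot at constant (v, w); return visited points."""
    traj = []
    for _ in range(steps):
        theta += w * dt
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        traj.append((x, y))
    return traj


def dwa_choose(pose, vel, goal, obstacles, max_acc=0.5, max_w_acc=1.0,
               v_max=1.0, w_max=1.5, dt=0.1, horizon=10, robot_radius=0.3):
    """Pick (v, w) from the dynamic window; (0.0, 0.0) if every option collides."""
    x, y, theta = pose
    v0, w0 = vel
    # Dynamic window: velocities reachable within one control period.
    v_lo, v_hi = max(0.0, v0 - max_acc * dt), min(v_max, v0 + max_acc * dt)
    w_lo, w_hi = max(-w_max, w0 - max_w_acc * dt), min(w_max, w0 + max_w_acc * dt)
    best, best_score = (0.0, 0.0), -math.inf
    for i in range(5):
        v = v_lo + (v_hi - v_lo) * i / 4
        for j in range(11):
            w = w_lo + (w_hi - w_lo) * j / 10
            traj = simulate(x, y, theta, v, w, dt, horizon)
            clearance = (min(math.dist(p, o) for p in traj for o in obstacles)
                         if obstacles else math.inf)
            if clearance < robot_radius:
                continue  # this trajectory would hit an obstacle
            # Prefer ending close to the goal, staying clear, and moving fast.
            score = -math.dist(traj[-1], goal) + 0.1 * min(clearance, 2.0) + 0.1 * v
            if score > best_score:
                best, best_score = (v, w), score
    return best


print(dwa_choose((0.0, 0.0, 0.0), (0.5, 0.0), (5.0, 0.0), obstacles=[]))
```

When no velocity in the window is admissible, this sketch simply stops. A real navigation stack would typically start a recovery behaviour instead.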