Gestonurse: A Robotic Scrub Nurse that Understands Hand Gestures

Up to 31% of interactions between surgeons and scrub nurses in the operating room (OR) involve errors that can harm patients. The proposed research will reduce the morbidity risks to patients caused by communication failures and retained surgical instruments (instruments left inside patients) by introducing a robotic scrub nurse that responds to hand-gesture commands. It provides an accurate and rapid way to detect, through gestures, which surgical instrument the surgeon needs, thus increasing efficiency and lessening the risk of complications.

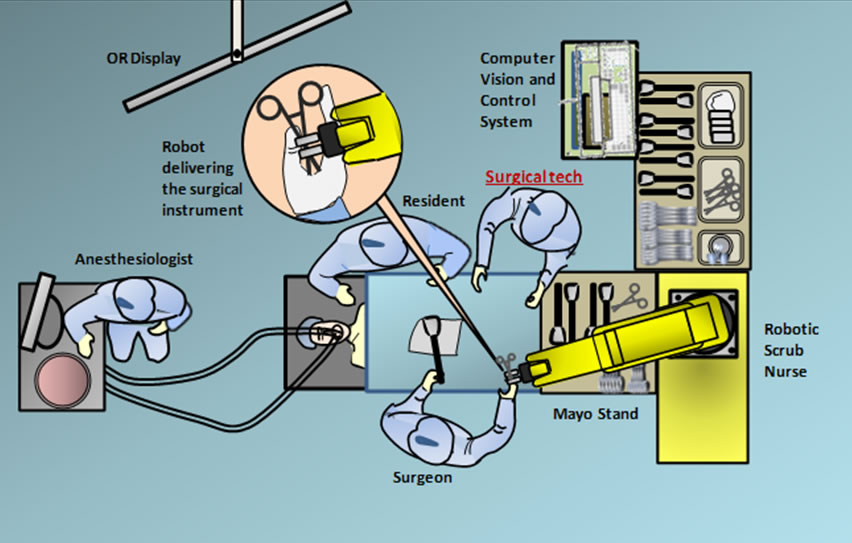

Although surgeon–scrub nurse collaboration provides a fast, straightforward and inexpensive method of delivering surgical instruments to the surgeon, it often results in “mistakes” (e.g. missing information, ambiguous instructions and delays). These errors have been shown to have a negative impact on the outcome of surgery, and they could potentially be reduced or eliminated by introducing robotics into the operating room. A robotic scrub nurse could reduce errors in the OR by automating the passing of surgical instruments, which frees personnel to focus on more complex tasks such as maintaining a sterile environment, preparing the required surgical supplies and monitoring the patient's condition. Automation also offers a possible reduction in errors caused by communication failures, which can lead to wasted resources, procedural errors, delays, distraction and inefficiency. This work presents a real-time robotic scrub nurse dedicated to passing surgical instruments to the surgeon in response to speech and/or gesture commands. An advantage of gesture interaction is that it is unaffected by ambient noise and does not require surgeons to be retrained, since surgeons already use hand signals to request instruments as part of standard OR procedure.

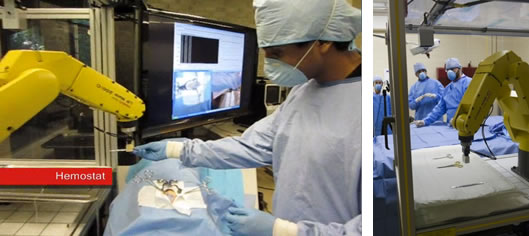

A robotic scrub nurse capable of handling and passing surgical instruments, called Gestonurse, was tested during a mock surgical procedure at the Large Animal Hospital at Purdue University. The robot uses real-time hand tracking and recognition based on fingertip detection and gesture inference. In an in situ experiment, the robot passed surgical instruments to the main surgeon effectively and safely, without interfering with his focus of attention. In addition to allowing natural interaction with the surgeon, Gestonurse provided the following features: (a) ease of use: the robotic system allowed the main surgeon to use his hands, which are the surgeon's standard working tool; (b) natural interaction: nonverbal commands issued through hand signals are fast and intuitive, so the robot must respond quickly while remaining reliable (currently, Gestonurse can process images in real time, recognize speech commands, and hand surgical instruments to the main surgeon within 4 s); (c) an unencumbered interface: the system does not require the surgeon to wear markers or attach microphones; and (d) reliability: fingertips were detected and gestures recognized with 99% and 97% average accuracy, respectively, and the robot can pick up instruments placed as close as 25 mm from each other.
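The description above says only that recognition is based on fingertip detection and gesture inference; it does not specify the algorithm. As a minimal sketch under stated assumptions, one common way to find fingertips is to walk a closed hand contour and keep the points whose distance from the palm center is a local peak well above the palm radius, then map the fingertip count to an instrument request. The names `find_fingertips`, `infer_gesture`, `synthetic_hand`, the threshold `min_ratio`, and the count-to-instrument table are all hypothetical illustrations, not Gestonurse's actual method or gesture vocabulary.

```python
import math
from typing import Dict, Iterable, List, Optional, Tuple

Point = Tuple[float, float]

# HYPOTHETICAL vocabulary: fingertip count -> instrument. The real Gestonurse
# gesture lexicon is not given in the source.
GESTURE_TO_INSTRUMENT: Dict[int, str] = {
    1: "scalpel",
    2: "scissors",
    3: "forceps",
    4: "retractor",
}


def find_fingertips(contour: List[Point], palm_center: Point,
                    min_ratio: float = 1.6) -> List[Point]:
    """Return contour points that look like fingertips.

    A point qualifies if its distance from the palm center is a strict local
    maximum along the closed contour and is at least `min_ratio` times the
    smallest contour distance (a rough proxy for the palm radius).
    """
    if not contour:
        return []
    cx, cy = palm_center
    dists = [math.hypot(x - cx, y - cy) for x, y in contour]
    threshold = min_ratio * min(dists)
    n = len(contour)
    tips = []
    for i, d in enumerate(dists):
        prev_d = dists[i - 1]          # i - 1 == -1 wraps to the last point
        next_d = dists[(i + 1) % n]    # wrap around at the end of the contour
        if d >= threshold and d > prev_d and d > next_d:
            tips.append(contour[i])
    return tips


def infer_gesture(contour: List[Point], palm_center: Point) -> Optional[str]:
    """Map the fingertip count to an instrument request, or None if unmapped."""
    count = len(find_fingertips(contour, palm_center))
    return GESTURE_TO_INSTRUMENT.get(count)


def synthetic_hand(finger_indices: Iterable[int], n: int = 36,
                   palm_r: float = 10.0, finger_r: float = 25.0) -> List[Point]:
    """Toy closed contour: a circle of radius palm_r with spikes of radius
    finger_r at the given sample indices (stand-ins for extended fingers)."""
    fingers = set(finger_indices)
    points = []
    for i in range(n):
        r = finger_r if i in fingers else palm_r
        angle = 2 * math.pi * i / n
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


if __name__ == "__main__":
    hand = synthetic_hand([2, 5, 8])      # three extended "fingers"
    print(len(find_fingertips(hand, (0.0, 0.0))))  # 3
    print(infer_gesture(hand, (0.0, 0.0)))         # forceps
```

In a real system the contour and palm center would come from segmenting the hand in each camera frame; the fixed ratio threshold and toy contour are simplifications, and a bare fingertip count cannot distinguish gestures that share the same number of extended fingers.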

Juan Wachs, an assistant professor of industrial engineering at Purdue University

http://web.ics.purdue.edu/~jpwachs/gestonurse/